Last Updated on 19th July 2022

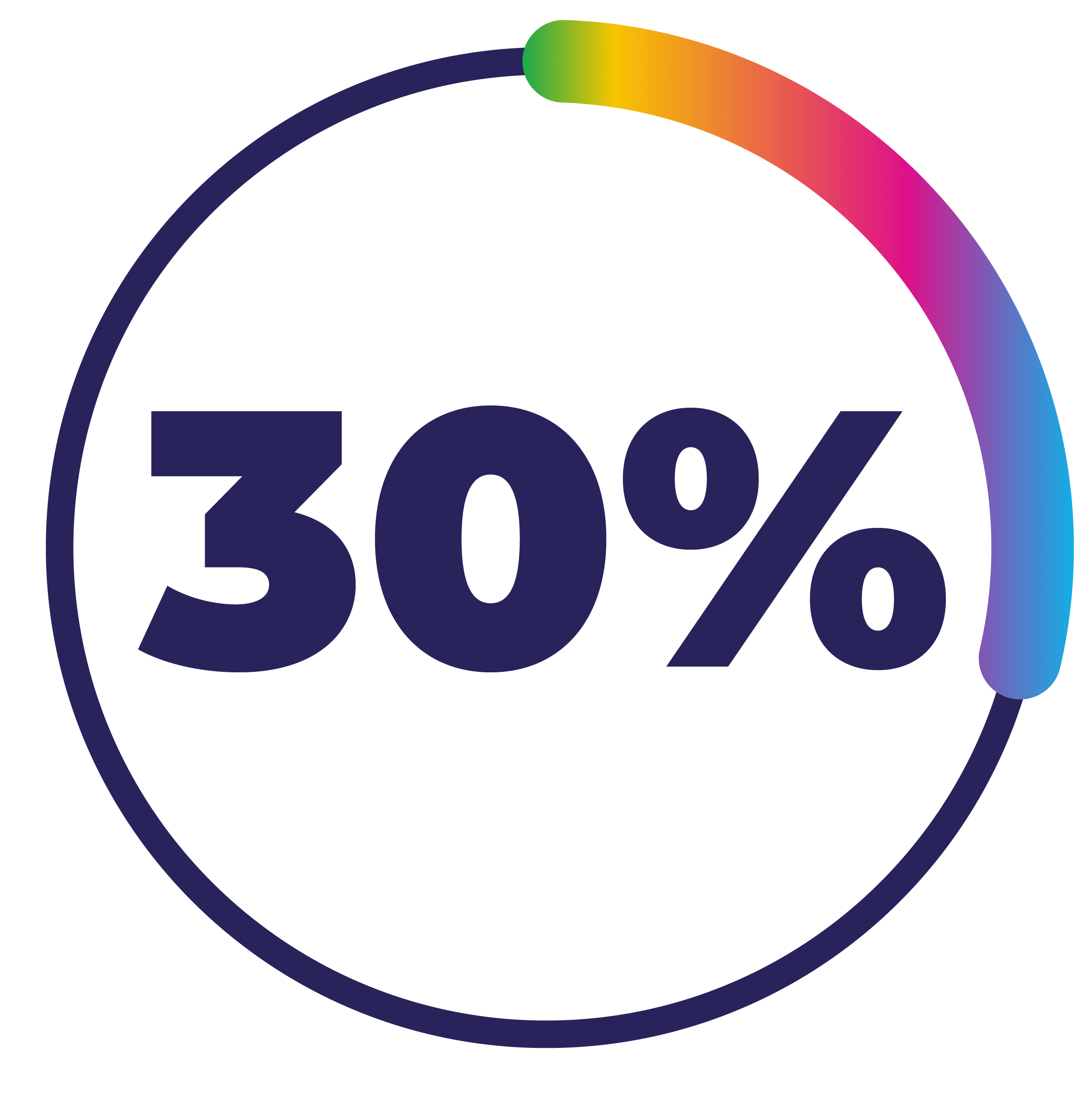

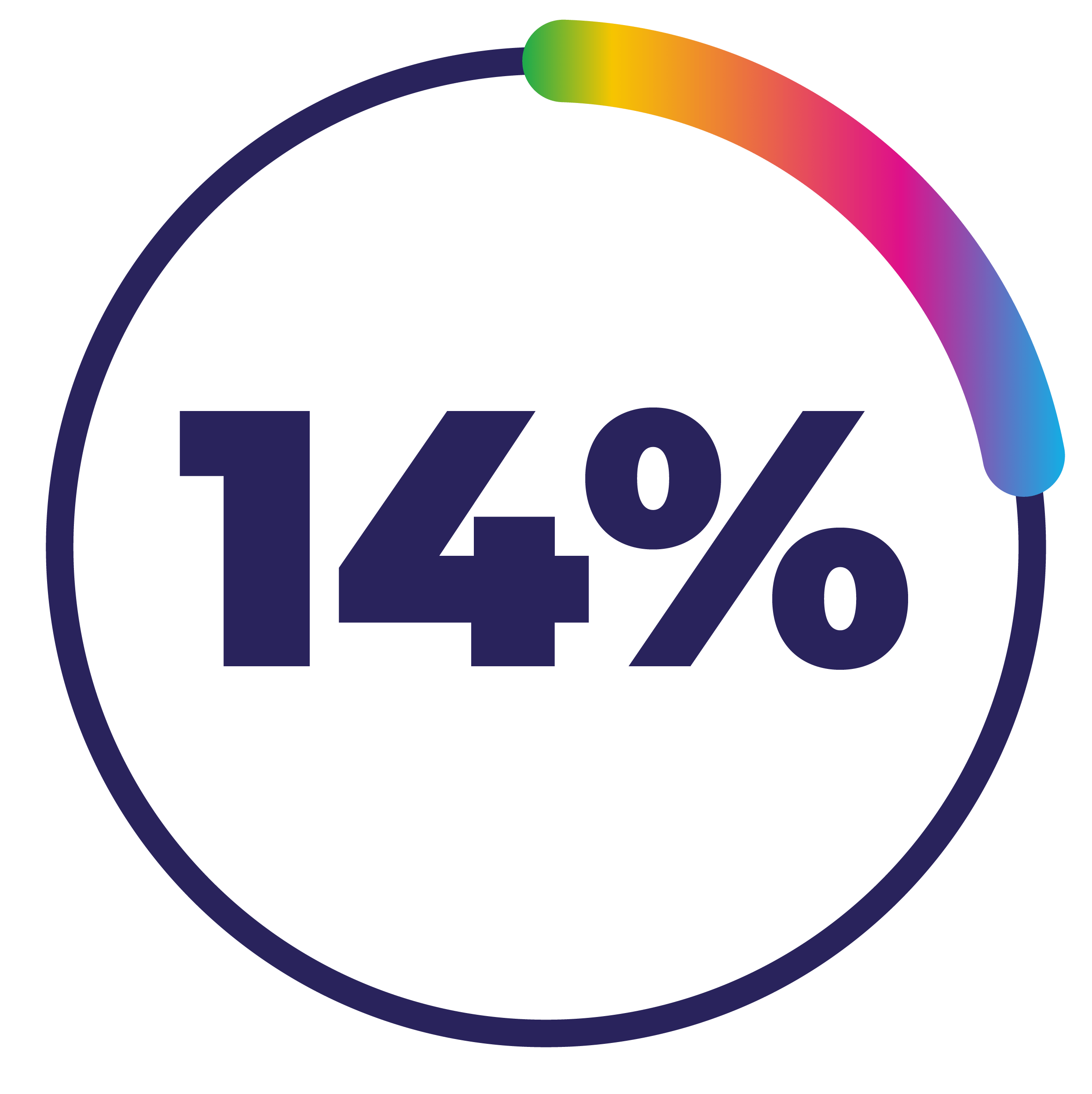

According to the latest Online Nation report by Ofcom, only 14% of young people (13-17 years old) are reporting and flagging potentially harmful content or behaviour they see online. This article investigates why our children and young people aren’t reporting online abuse and inappropriate content and why we should all be more proactive when it comes to making the internet a safer place to be.

Young people keeping secrets is nothing new, especially if it involves something distressing. Think back to your own school days – did you always tell your parents or teacher if someone said something mean to you in the playground? Or if you accidentally saw something inappropriate on TV?

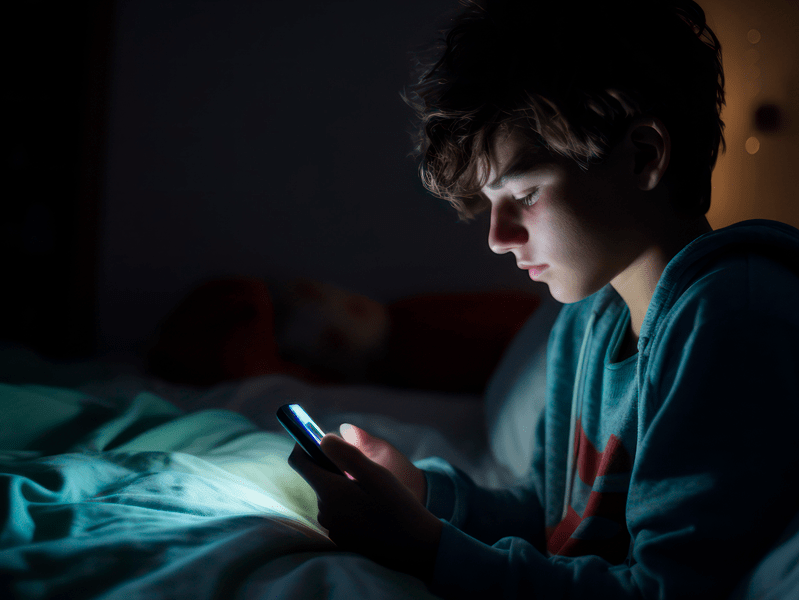

What has changed is that there are now many more places where a child or young person might come across harmful content, online abuse and age-inappropriate content. From scrolling social media in bed in the morning, to being shown something on a friend’s phone during lunch break, through to playing an online game to unwind in the evening, the likelihood of exposure has greatly increased.

When children and young people see harmful content online, the answer isn’t only to report it to, and discuss it with, parents, teachers or other trusted adults. Almost every social media site, app, platform and gaming console has features for reporting and flagging that content. However, according to the latest research from Ofcom, very few children and young people seem to be using them.

What Inappropriate Content Are Children and Young People Seeing Online?

Unfortunately, children and young people are likely to be the target of some types of inappropriate content and abusive behaviours that adults won’t be. This includes grooming attempts, targeted exposure to sexual materials and cyberbullying from their classmates or peer group.

Other harmful content will be similar to that experienced by all ages, such as scams, misinformation and trolling, although it may be tailored to the target’s age. For example, a scam aimed at an online user in their 70s will likely take a very different form from one aimed at a young person, which might involve fake cryptocurrency or in-game currency.

Even if we’re occupying the same online spaces as young people, in reality we’re probably seeing very different things. Most apps and platforms rely on algorithms – they gather information about what we like and dislike every time we use them, then use that information to show us more of the content we like in the future, whether that’s a topic we frequently search for or the type of profiles we interact with online.

So, while you and your child might both be scrolling Instagram and watching TikTok videos, the content you are each being shown is likely to be very different. Furthermore, the Online Nation 2022 report found that the types of potential harm encountered differed by age group: younger adults are more likely to face hateful, offensive or discriminatory content, whilst users aged 55+ are more likely to encounter scams, fraud or phishing.

This matters because it is easy to mistakenly assume that the online world we experience is the same as the one experienced by the children and young people in our care. It’s also important to note that children and young people will be at different stages of developing emotional and mental coping skills. Harmful or inappropriate content can pose a safety risk to children of any age; however, viewing it may be more traumatic for some than for others.

Why Don’t Children and Young People Report Online Abuse and Harmful Content?

Shame and Embarrassment

Becoming a target for bullying or abuse can be a source of embarrassment for a child or young person, and they may internally blame themselves for being a victim. They may feel like they’re too different from others, have done something wrong or that there’s something ‘wrong’ with them. It can create feelings of shame that make it difficult to broach the subject with anyone. They may also be worried about further bullying and possible retaliation if they tell someone.

If a child or young person has accidentally seen, or been sent, something with sexual content, they might be hesitant to bring it up because the subject matter feels awkward.

Getting in Trouble

Children and young people may choose not to report online abuse and inappropriate content because they’re worried there may be consequences for themselves.

For example, they could be concerned about their parents’ reactions: will they be cross and think the child was looking at something online they shouldn’t have been? If the child was using a platform they aren’t yet old enough for, will their parents remove their device access, leaving them feeling isolated from their friends? Will their parents think of them differently because they’ve brought up the topic of sex or child sexual abuse?

Children and young people may also misunderstand the law around illegal harmful content and worry that they could get into trouble simply for viewing it. They may fear the police or their school getting involved.

Also, if a child or young person reports content, they might be unsure what happens next and worry about getting into trouble with that app or platform. We know that some children under 13 use apps and social media sites intended for users aged 13 and over, and they might be worried about being ‘kicked off’ and banned from the site, leaving them digitally excluded.

Built-up Tolerance and Acceptance

Unfortunately, children and young people may simply be ‘used to’ seeing inappropriate and harmful content online. The frequency with which they encounter this type of material or come across abusive content might mean that it’s accepted as a normal part of being online: just ‘background noise’ they block out.

They may also think there’s no point in reporting it. In the Online Nation 2022 report, 22% of users said they did not take action because they didn’t think doing so would make a difference.

Not Knowing What to Do

Every app, platform and website has its own process for dealing with harmful content, and its own interface for doing so. Although many children and young people are very tech-savvy, this doesn’t mean they all know how to report or flag things online.

This list is not exhaustive – every child and young person is different and will have their own reasons, based on their circumstances, experience and personality.

How Can I Encourage Children and Young People to Report Online Abuse and Inappropriate Content?

Talk – and Listen!

Talking to a child or young person about how to stay safe online may help reduce the chances of them viewing inappropriate content or being targeted by groomers. By being proactive and learning about the risks of the digital world, you’re arming yourself with the tools you’ll need to have helpful, productive conversations with the child or young person in your care. Set family rules and boundaries about being online.

Build a relationship in which the child or young person in your care feels secure and confident to confide in you and openly discuss things that upset them.

Encourage conversations about online experiences, both good and bad. When talking about harmful content, avoid going into detail about what that content might be; instead, focus the conversation on the action that can be taken, such as reporting, blocking and using safety settings. Talk about boundaries and come up with family rules for internet use together.

Ask the child or young person in your care why they might not report abusive or inappropriate content online. Reassure them that simply reporting content won’t get them into trouble, and neither will talking to you about something they’ve seen online that upsets them or makes them feel uncomfortable. Make sure they know who their Trusted Adults are.

If your child does come to you to talk about something they’ve seen online, stay calm. Although you might be shocked or worried about something they tell you they have seen, or even angry at them for using a site or app you told them not to, having a strong emotional reaction may put them off coming to you again in the future.

Learn Together

To keep the internet a safer place for the children and young people in our care, we need to make a collective effort and take steps to ‘clean up’ digital litter.

Even if you already know how to report and flag this type of content, sit down with the child or young person in your care and go over exactly how to do this on each platform. Learn about the process together using our Safety Centre. Remember, lots of people are visual learners, so have your phone or digital device handy and everyone can see exactly where the buttons are on the actual platform.

Be An Example

One of the best things we can do to help children and young people stay safer online is to lead by example. Just like in the offline world, the children in your care look to the trusted adults around them for guidance on how to navigate, react and interact in this world.

However, unlike in the offline world, using digital devices and being online is often a solitary activity. This means you may need to make a more conscious effort to show and talk to the children in your care about what you do when you come across harmful content and bad online behaviour. This could be as simple as mentioning that you saw a bullying comment online, so you reported it to the platform.

If you’re someone who usually scrolls past abusive or harmful content, ask yourself: ‘Would I want my child, or any child, to see this?’ If the answer is no, take action!
