Last Updated on 12th May 2023

Read the script below

Colin: Hello and welcome to Safeguarding Soundbites.

Natalie: This is the safeguarding news podcast that brings you all the past week’s online safeguarding news…

Colin: And advice. My name’s Colin.

Natalie: And I’m Natalie.

Colin: This week, we’re going to be talking TikTok advertising, how the Online Safety Bill could cause WhatsApp to leave the UK, and official health advice on children’s social media use.

Natalie, why don’t you kick us off this week by telling us what’s been happening in the world of social media?

Natalie: Sure! We’ll start with TikTok, who have had a few issues around advertising on the platform this week. First, two posts advertising vapes have been banned. The ads were posted by two different influencers who were promoting particular brands of vapes.

Colin: What’s interesting is that we recently published an article about this – how social media can be a factor in influencing young people to vape.

Natalie: Yes, we did! Just a few weeks ago. And that’s because healthcare officials have warned that youth vaping is on the rise, despite it being illegal to sell vapes to under-18s.

Colin: It’s become a trend – and if young people are seeing people they admire or follow promoting vapes on social media platforms like TikTok, that’s actually just going to encourage that trend.

Natalie: Exactly. And nicotine is, of course, addictive, but it’s also important to note that it’s particularly dangerous for children and young people. Some experts are saying it’s harmful to brain development for under-25s. You mentioned our article, Colin, could you tell us a bit more about that?

Colin: Yes, so our listeners can find that on our website at saferschoolsni.co.uk and on the Safer Schools NI app.

Natalie: Which is free to download right now!

Colin: It is! On whatever app store your device uses. But yes, the article is essentially a full guide to everything parents, carers and teachers might want to know about youth vaping – what it is, why it’s harmful, why young people might vape and also some really great top tips in there, too.

Natalie: Great, thank you! So the other TikTok story that’s been in the news is that a new report has come out on medical misinformation on the platform. A study presented at Digestive Disease Week in Chicago this week showed that four in 10 posts about liver disease on TikTok contain misinformation.

Colin: That’s really high!

Natalie: It is! The most common misinformation was about how to heal liver disease – using herbal products, eating certain foods like beef liver or even doing parasite cleanses.

Colin: I don’t want to know what the last one involves!

Natalie: You really don’t!

Colin: But I suppose this speaks to a wider problem with misinformation on social media sites like TikTok?

Natalie: It does. And this is something I think that parents, carers, teachers…anyone in a position of safeguarding needs to be aware of – that children and young people may be exposed to or even seek out medical advice and information on social media.

Colin: Actually, not too long ago, we reported in our Daily Safeguarding News about young people turning to TikTok more than Google to search for advice.

Natalie: Yes. And even beyond social media, there have been reports of children and young people using AI like ChatGPT to find medical advice, in particular mental health advice.

Colin: In some cases, that might be due to feeling embarrassed or like they can’t go talk to someone about their mental health or they’re worried about the consequences of having that conversation.

Natalie: Which is why it’s so important a) to let the children and young people in our care know who their trusted adults are…the adults in their life that they can go talk to when they’re feeling upset or worried about their health or anything. And b) to open up those conversations ourselves and let them know it’s okay to talk about health, whether that’s mental health or physical health.

Colin: And just to add to that also, Natalie, that we talk about the importance of seeking professional medical advice from a doctor or a school nurse and that there is misleading and inaccurate medical information on social media.

Natalie: Yes, great point!

Colin: Okay, moving on to other social media news now and ministers have been warned that the messaging platform WhatsApp could leave the UK if the current form of the Online Safety Bill goes through.

Natalie: This is to do with end-to-end encryption, is that right, Colin?

Colin: Yes…so basically, end-to-end encryption is a system of communication that keeps messages private between the sender and the person or people receiving that message.

Natalie: In other words, if I send you a message on WhatsApp, it’s just between you and me. No one else can access it?

Colin: Exactly. And that’s why it’s a bit of a hot button topic – the Online Safety Bill is asking for tech companies to take responsibility for detecting child sexual abuse material (CSAM) and terrorism content on their platforms, but the tech giants say that breaking end-to-end encryption would breach the public’s human rights.

Natalie: So on the one hand, you have people and companies like WhatsApp who are concerned about end-to-end encryption and outside parties being able to access information sent between users…

Colin: And on the other, it’s this protection from third-party access that many child safeguarding campaigners are worried actually protects predators, because the law can’t see the content they are sending.

Natalie: And so services like WhatsApp might leave the UK if the bill won’t explicitly promise to protect end-to-end encryption on platforms like theirs, as that’s one of their main features – that users’ messages are kept private.

Colin: Which has been a conversation this week in Parliament. Well, more of a row!

Natalie: A lively debate!

Colin: Yes, we’ll go with that! And WhatsApp have also been in the news after screenshots appeared showing the app’s microphone supposedly ‘listening in’ on devices. The screenshots showed notifications that the messaging app was attempting to access the microphone on Android devices during times when users would be asleep. However, WhatsApp have said the cause is a bug in Android systems, rather than a creepy attempt to listen to you snore! If you are worried, however, you can simply turn your microphone off in your phone’s privacy menu settings.

Natalie: In other news, official data has shown that the UK is the online child abuse capital of Europe, with police being passed over 300,000 online child abuse reports last year. That’s an increase of 225% compared to the year before. The increase in reports has been linked both to improved detection and reporting by tech companies and to a rise in online child abuse linked to the coronavirus pandemic, when many children spent extra time online that was often unsupervised.

Colin: Andy Burrows, former head of child safety online policy at the NSPCC, says the worrying rise illustrates the need for the introduction of the Online Safety Bill as soon as possible. But it also, Natalie, reinforces the important need to educate and empower our children and young people with the knowledge of how to stay safer online.

Natalie: The American Psychological Association – or APA – have issued their first ever health advice on social media use by children. The report outlines how children and young people can be impacted by using online social networks.

Colin: Online social networks meaning social media?

Natalie: Essentially, yes.

Colin: Ah okay. Did the report say that social networks are harmful?

Natalie: Not outright – the APA said that online social networks are not inherently beneficial or harmful to young people but that they should be used with consideration. They recommended that parents and carers address children’s habits and routines on social media and remain vigilant to stop social media interrupting sleep routines and physical activity.

Colin: We’re all about healthy screentime routines here at Safer Schools NI!

Natalie: We are! The APA also condemned social media algorithms that push young people towards harmful content.

Colin: For our listeners that aren’t sure, can you tell us more about social media algorithms?

Natalie: Yes, for example, if I watch a TikTok video about beagle puppies, the algorithm will register that I might like to see more of this, and will show me similar videos. If I interact with these similar videos, the algorithm starts to recognise that I enjoy viewing this type of content and will continue to push this into my feed.

Colin: Well, we would all be happy if young people’s social media was filled with beagle puppies, but what happens if they watch something more harmful, like self-harm, eating disorder or suicidal ideation content?

Natalie: This is the problem. It means children and young people can end up caught in a loop of viewing harmful content on social media.

Colin: Although this was the American Psychological Association, the advice in the recommendations applies just as much here in the UK. What are some of the take-home lessons from their recommendations?

Natalie: So as well as ensuring the child or young person in your care has healthy screen time habits, they also recommended limiting the time young people are spending comparing themselves to others, in particular around appearance- and beauty-related content.

They also suggested regularly screening for problematic social media use, looking out for addiction-type behaviours, such as spending more time on social media than intended or lying to get access to social media.

And to implement an age-appropriate level of monitoring through parental controls and – and this is really important but often adults find this difficult to do – for parents and carers to model healthy relationships with social media.

Colin: Yes, we definitely recommend that too!

Natalie: Finally, it’s time for our safeguarding success story of the week! Take it away, Colin!

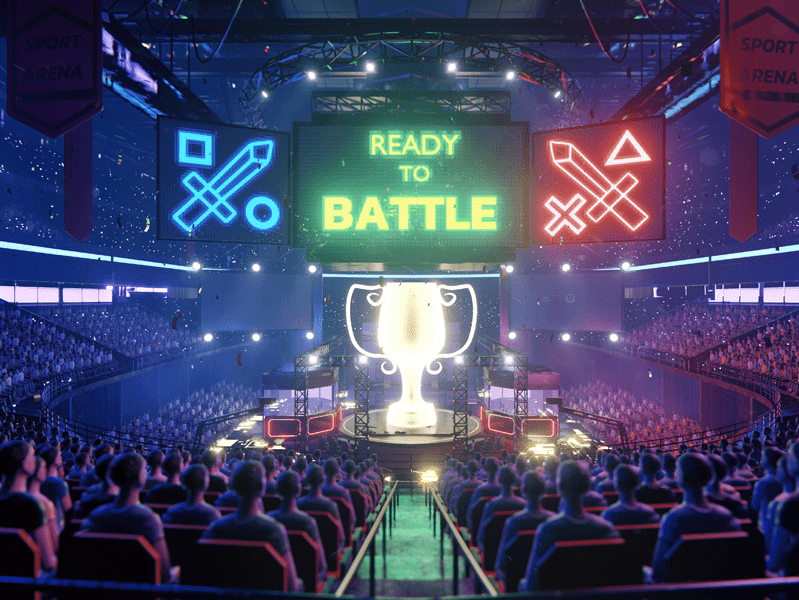

Colin: Yes, our good news story this week is about Northern Ireland’s all-girls team of gamers! From St Mary’s College in Derry/Londonderry, the team have won a UK gaming industry journalism prize for an article about their experiences in esports as girls. Esports – that’s electronic sports or competitive gaming – is a very male-dominated sport, so it’s awesome that not only is this team challenging that stereotype but now they’re taking on gaming journalism too! You can find out more about esports by visiting our website or on the Safer Schools NI App.

Natalie: Fantastic! Well that’s all for this week – remember that you can keep up to date with us on our social media by following Safer Schools NI.

Colin: And you can find all of our articles, plus safeguarding advice, need-to-knows and so much more on the Safer Schools NI App.

Natalie: Until next time…

Colin: Thanks for listening and stay safe!

[Both]: Bye!
