Last Updated on 12th January 2024

Read the script below

Natalie: Hello and a very warm welcome back to 2024’s first episode of Safeguarding Soundbites!

Colin: Happy new year, Natalie!

Natalie: You, too. And to all of our listeners as well.

Colin: Yes, we hope you had a lovely break and are feeling well rested and ready for the year ahead.

Natalie: And another year of Safeguarding Soundbites!

Colin: Yep, and for any new listeners, I’m Colin and this is the weekly podcast that brings you all the latest safeguarding news, updates and advice. This week I’m joined by Natalie.

Natalie: You sure are. So what have we got coming up today, Colin?

Colin: Today we’re going to be talking about the latest Meta measures to protect children and young people from harmful content, the tragic story of an assault in the Metaverse and catching up on some other headlines.

Natalie: Okay, you kick us off then, Colin.

Colin: First up in social media news and Meta have announced they are introducing new measures to hide content about suicide, self-harm and eating disorders for users under 18.

Natalie: Is this on Facebook?

Colin: This will be on Facebook and Instagram.

Natalie: Ah okay. So those users, those young people, just won’t see that type of content? What happens if one of their friends or someone they’re following posts something about, for example, eating disorders?

Colin: So, Meta have said that those users won’t see the content at all. They’ve also said that if a user posts about self-harm or eating disorders, like you say, mental health resources from charities will be displayed. They’re also going to be automatically putting the accounts of under 18s into the most restrictive settings.

Natalie: Oh I thought they already did that!

Colin: For new users, yes. But Meta says they’ll now be moving all users, including existing users, into those settings. And – again they already partly do this but this is an expansion – they’re going to work on hiding more of this type of content if people search for it on Instagram.

Natalie: So if someone searches ‘self-harm’ or the like on Instagram?

Colin: Then in theory, instead of seeing other posts about that, they will be directed to expert resources.

Natalie: Okay. Are all these changes live now?

Colin: No, the new features are being rolled out in the next few months, so we’ll definitely be keeping an eye out for them and taking a look at how effective they are.

Natalie: And come back to you, listeners, on what we find!

Colin: Absolutely. It’s also worth noting that several critics have spoken out about this already, saying that essentially this doesn’t go far enough. An advisor for the Molly Rose Foundation has described this as a piecemeal step when a giant leap is urgently required and the executive director of online advocacy group Fairplay has said this is an “incredible slap in the face to parents who have lost their kids to online harms on Instagram” and pointed out that if Meta is capable of hiding pro-suicide and eating disorder content…well, why have they waited until 2024 to make these changes?

Natalie: Hmm that’s kind of a fair point there…it’s not as if this hasn’t been brought up before as an issue – a serious issue.

Colin: It is a very good question and I wonder if Meta really has an answer for that. Okay, moving on from that one. Natalie, what has piqued your interest in safeguarding news this week?

Natalie: Well, I want to discuss this story that’s been all over the news really for the last week or so. It is a sensitive subject so if anyone’s listening with children about, like in the car with them, I would maybe suggest coming back to this a little later.

Colin: So hit pause on the podcast now.

Natalie: So this is the story that’s been hitting the headlines about the Metaverse. An investigation has been launched into reports that a young person under 16 has been sexually assaulted while playing a virtual reality Metaverse game. Now, I’ve played virtual reality games, with the VR headset and I can only imagine how traumatic this experience was – everything feels very real with that headset on.

Colin: It does. You said an investigation?

Natalie: Yes, a police investigation, and this is believed to be the first time there’s been one in these kinds of circumstances…in terms of a virtual sexual assault.

Colin: But certainly not the first time we’ve heard about sexual harassment taking place in the Metaverse.

Natalie: Sadly, no. And remember, it’s a really immersive experience – it’s designed to be that way, to make you feel as if you’re physically having those experiences in real life. So this isn’t something to be dismissed as ‘not real’. Also, this is someone under 16 years old. There are big questions raised here about why a game, virtual reality or not, is putting a child in circumstances in which their avatar or character can be exposed to any sort of sexualised and violent behaviour.

Colin: Absolutely. I remember we have spoken before on this podcast about this ‘bubble’ that Meta introduced?

Natalie: Yes, so after previous problems in the Metaverse with sexual harassment, Meta introduced the ‘safe zone’ – sort of like a bubble or cushion that protects the avatar from being touched. But that isn’t foolproof. If a user is being attacked, they might not be able to turn that on in time…a young person playing might not even know that’s an option, either, if their parent has set up their account for them. And also, really importantly, if that safe zone is on then no one can touch you or see you, and you can’t interact with them in any way. Is a young person really going to want to play like that? Or are they going to want to interact with others as part of the fun?

Colin: That’s a good point. Now obviously for any listeners who are parents, carers and people who support children and young people in any sense, this is a really frightening story and a frightening prospect too, if they know someone in their care is using VR or the Metaverse. What advice can we give, Natalie?

Natalie: Yeah, definitely scary stuff. So first up I’d say, if you don’t know if the young person in your care is using the Metaverse, try and find out. Bring up virtual reality in conversation, casually, and see if they’ve used it. Yes, you might not have a VR headset but maybe their mate does.

Colin: How do we do that casually but intentionally?

Natalie: Always a challenge! So you’re not steaming in there very emotional and saying, “I heard this story about assault on Metaverse, have you been on that behind my back?!”.

Colin: Because they’ll say ‘nope’ to that!

Natalie: Exactly. You’re saying ‘Oh, I was online earlier and saw something about VR headsets. Have you ever seen one of them? I’m not even sure what it is!’, or however works best for you.

Colin: Hopefully they’ll then want to explain it to you!

Natalie: Yeah, and it’s whatever way feels comfortable for you. Just don’t be emotional or look scared or worried, basically! And if they are using VR, and the Metaverse specifically, absolutely, 100% make sure you both know all of the safety settings and go through all of the onboarding process together. Bear in mind, this is recommended for over 13s but that doesn’t mean it’s completely safe for over 13s. Never leave your child’s safety up to an online platform. Do your research, follow the advice and be in the same physical space if they’re using something like this.

Colin: Great advice, thank you, Natalie. Now after a very quick ad break, we’ll be back with more safeguarding news and updates.

INEQE Safeguarding Group are proud to launch our 2024 Training Prospectus. In an ever-changing digital environment, we know that standing still means falling behind. Our range of safeguarding webinars are designed for safeguarding and educational professionals who want up to date, credible, high-quality training on today’s safeguarding topics and concerns. To see our full range of upcoming training visit our website ineqe.com.

Colin: Welcome back. So also in the news this week, the Department for Science, Innovation and Technology are asking the public, adult performers and law enforcement for their views on the impact of pornography. The questionnaire, which can be found on their website, will be used in a review of the industry that will go towards making recommendations to the government.

Natalie: What sort of questions are they asking?

Colin: It’s about things like the effect of porn on relationships, mental health and attitudes towards women and girls. And they’re also going to be looking at how AI and virtual reality are impacting both the way porn is made and the way it’s consumed.

Natalie: Now that’s interesting because we’ve talked before on this podcast about how AI is being used, for creating deepfake porn and also, horrifically, for making child sexual abuse imagery.

Colin: We have and that’s obviously deeply concerning. And it’s quite timely because the other story I wanted to mention was about these new figures released by the police showing that more than half of child sexual abuse offences recorded in 2022 were committed by other children, which the police are saying is fuelled by access to violent pornography and smart phones.

Natalie: Wow, okay. Do the figures show what kind of increase that was, percentage or number wise?

Colin: Yes, so these figures come from 42 police forces in both England and Wales. They are reporting that 52% of child sexual abuse offences involved a child aged between 10 and 17 as a suspect or perpetrator, which is up from a third of offences in 2013.

Natalie: Not an insignificant increase, then.

Colin: No, it’s up fairly substantially. Now, Ian Critchley, who’s the national policing lead for child abuse protection and investigation has said that officers don’t want to criminalise a generation of young people for taking and sharing images within consensual relationships.

Natalie: Which is self-generated child sexual abuse material, really. If it’s someone underage taking and sharing imagery of themselves.

Colin: Absolutely. But the data also shows that it isn’t just an increase in these types of incidents with indecent images but also in direct physical abuse. Critchley went on to say that the gender-based crime of boys committing offences against girls has been “exacerbated” by the accessibility to violent pornography and that violent pornography has been normalised.

Natalie: It’s very concerning, this type of content is just a ‘click’ away. At any time.

Colin: It is, and we need to help and support young people to know that this type of behaviour isn’t ‘normal’ or standard – especially any form of violent pornography.

Natalie: And it’s also so important that young people understand the implications of taking, creating and sharing sexual imagery of themselves. That one, it is against the law. In some parts of the UK this is known as child-on-child abuse. Two, it’s incredibly risky, you don’t really know where that might end up or who it might end up with. In some cases, this type of content has been used to bully, shame and blackmail other young people. And three, it might be something they live to regret, sadly.

Colin: Very true, we need to make sure that the young people in our care are educated and empowered on this, which is so important. Furthermore, we also need to ensure that if a young person has made a mistake or has become a victim of this type of abuse, they have access to help, and as adults we should never shame or blame but seek to support. And for anyone who’s interested in taking part in that survey, as I said it’s available on the Department for Science, Innovation and Technology’s gov.uk site and it closes on the 7th of March.

Colin: Let’s take a look at some of the safeguarding stories hitting the headlines here in Northern Ireland. Natalie?

Natalie: Yes, so this week a list of alleged drug dealers was posted online after the suicide of a vulnerable young person at the end of last year. The list included details such as names, phone numbers, car registrations of County Armagh residents alleged to be involved in drug dealing and criminal activities. Our CEO and online safety expert Jim Gamble spoke out about the incident, saying that: “No one supports anyone involved in drug dealing and criminality, but there is no way of verifying the credibility of the information, the allegations or indeed of the intent of those people creating these lists.” and that, “those creating these online posts are lowering themselves to the level of those they think they are exposing and at worst committing a criminal offence themselves.”

Colin: Absolutely, as Jim says, whilst the people posting these lists might think there is a sort of greater good here, they could actually end up with a criminal charge themselves. We have to always remember that posting any sort of allegation or accusation online has potential consequences.

Natalie: Legal consequences, to be specific!

Colin: Exactly! Now, a headteacher from Belfast has spoken about how children from low-income working families who don’t qualify for free school meals are coming to class hungry. Katrina Moore, who is principal of Malone Integrated College, says that watching a child go hungry was heartbreaking.

Natalie: Gosh, I’m sure.

Colin: Ms Moore is temporarily using grant money to provide free food for all the pupils, adding that she’s very conscious of those young people she knows probably aren’t going to get a hot meal at any time during the day, whether that’s breakfast, dinner or an evening meal.

Natalie: That really is heartbreaking and just shows how funding issues for schools and the current cost of living crisis are really impacting families across the board.

Colin: Absolutely. Natalie, any other NI stories from this week?

Natalie: Yes, I wanted to talk about these figures released by the NSPCC. Basically, the charity has released a report about child sexual offences using stats from the PSNI that show that recorded offences against children and young people under 18 are the highest they’ve been since they started tracking them almost two decades ago.

Colin: Oh yes, I did see this, too. Remind me what the number of offences is?

Natalie: So according to these figures released by the NSPCC, there were 2,315 offences in 2022/23.

Colin: Against children and young people under 18?

Natalie: Yep, exactly. And for context, the figures were the highest on record across the UK also.

Colin: Okay. And have the NSPCC made any recommendations or comments?

Natalie: Yes, the NSPCC have called for immediate action, increased funding for prevention programs, as well as better support for victims and stronger law enforcement measures.

Colin: Okay, well you can’t disagree with that!

Natalie: Okay. Thanks, Colin. Now, I think it’s just about that time…

Colin: What time’s that?

Natalie: Oh you know! It’s…

Colin: Safeguarding success story of the week! Take it away, Nat.

Natalie: That’s all we have time for this week. Big thanks for listening and please join us next time for more safeguarding news and advice.

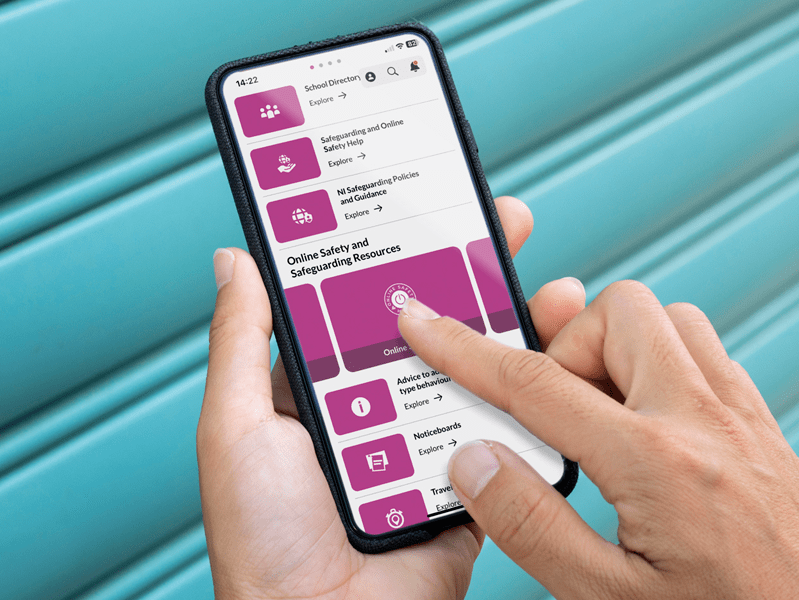

Colin: In the meantime, remember you can find us on social media by searching for ‘Safer Schools NI’. You can also access even more safeguarding advice and a whole host of resources on our Safer Schools NI App, which is free to download on your phone or device’s app store.

Natalie: Don’t forget to add this podcast to your favourites and if you’ve enjoyed this episode, we’d love for you to share it with your friends, family and colleagues.

Colin: Until next time…

Both: Stay safe!
