Last Updated on 30th September 2022

Many of today’s most popular platforms are releasing a growing number of features and updates that claim to help parents and carers protect younger users. Instagram has been releasing updates almost monthly, many of them building on its promise to improve the platform’s impact following significant negative press.

According to Ofcom, 99% of UK children went online in 2021, and 62% said they had more than one online profile. As Instagram is one of the most popular online platforms, it’s important to stay aware of every update it releases. We’ve looked at the latest Instagram updates to help parents, carers, and safeguarding professionals understand the safety features available on the app, and how effective these settings actually are.

What’s New: Meta has expanded Instagram’s existing parental control tools and its Sensitive Content Control feature to include new search restrictions and inappropriate content filters. Meta has also said it is working on features to filter nudity and sexualised content out of in-platform messaging, but these are still in development. We will continue to monitor any testing or developments as they progress.

1. Family Center

What is it?

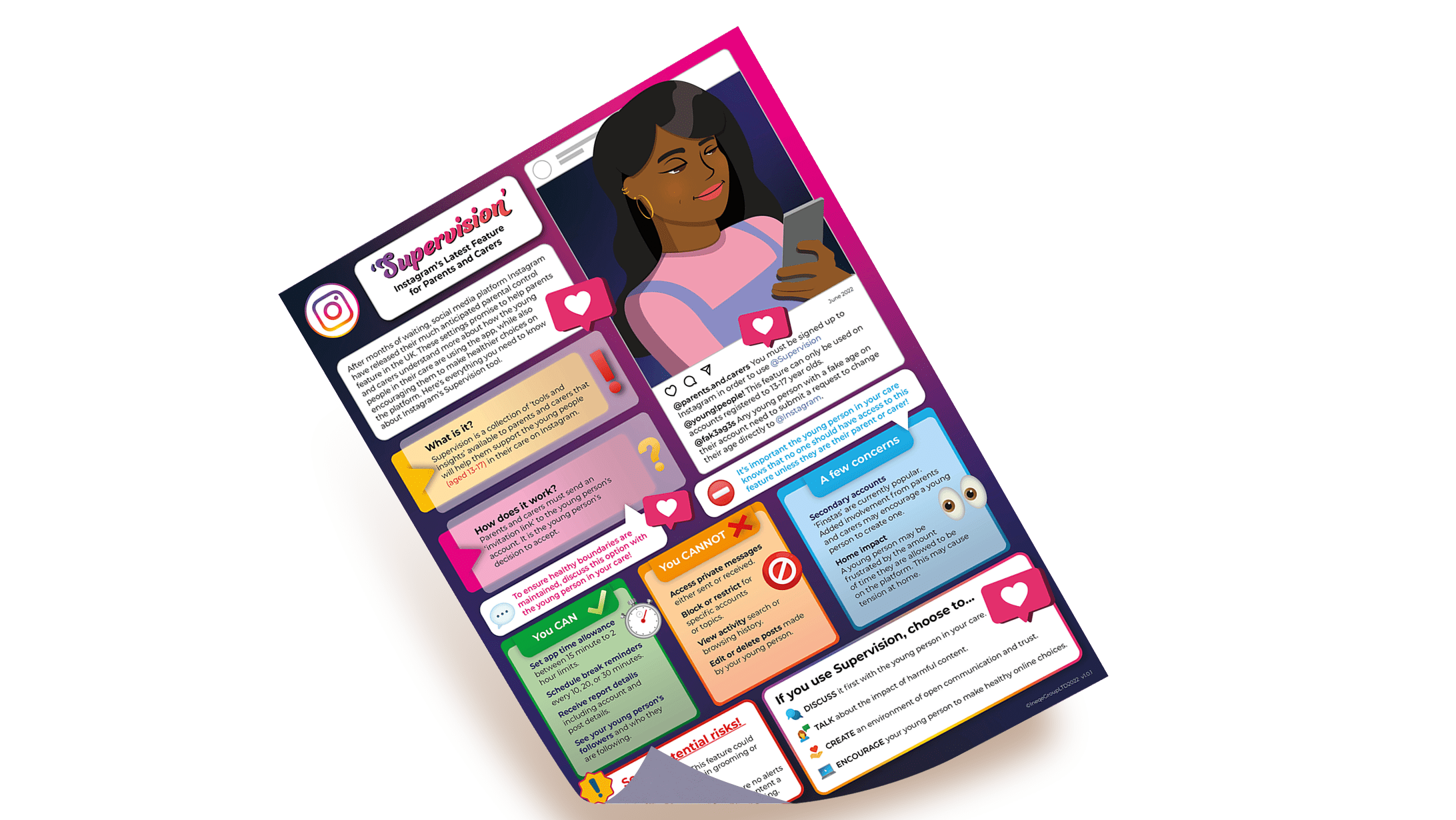

Instagram has been releasing different types of parental controls since the start of 2022, and has finally released a comprehensive parents’ guide and education hub within its ‘Supervision’ tools, which launched in the UK on 14th June 2022. Parent company Meta calls this collection of parental controls ‘Family Center’.

How it works

Before parents can use Family Center, an ‘invitation link’ must be sent to the young person’s account. It is the young person’s decision to accept; a parent or carer cannot enforce supervision without their permission. To maintain a healthy relationship and keep boundaries in place, discuss this option with the young person in your care first.

All of these tools can be found under ‘Supervision’ in the Settings tab of an individual Instagram account.

Instagram’s Supervision features include:

Areas of Concern

Potential Risks

2. Sensitive Content Control

What is it?

The Sensitive Content Control feature gives users more control over the type of content they see on Instagram. It was previously limited to the ‘Explore’ page, but has now been extended to everywhere Instagram makes recommendations, such as the ‘Search’ and ‘Hashtag’ pages and a user’s personal feed. Instagram is also planning to release a shortcut to this feature on the ‘Explore’ page, a ‘Not Interested’ button that lets users quickly tell the platform’s algorithms which content they do not wish to see, and a ‘Nudity filter’ for chats that is in the early stages of development. None of these planned features has been given a release date.

A ‘Hidden Words’ feature has also been added to privacy settings, allowing parents, carers, and young people to restrict specific words, phrases, numbers, or emojis that might be upsetting, offensive, or triggering to them. DMs, posts, and comments containing these words are automatically filtered from view to protect the user.

How it works

Instagram defines sensitive content as “posts that don’t necessarily break our rules, but could potentially be upsetting to some people.” This includes violent or sexually explicit/suggestive content, as well as content relating to regulated products such as drugs and alcohol.

Users can choose from three options for the ‘level’ of sensitive content that can appear. These options have been renamed to help users better understand how the filtering system works.

Sensitive Content Control and Hidden Words can both be found in the ‘Settings’ of an individual Instagram account. Sensitive Content Control is under ‘Account’ settings, and Hidden Words can be added and customised under ‘Privacy’.

Remember: Instagram’s minimum age requirement is 13. Some children may lie about their age and, if they say they are over 18, could be exposed to sensitive content. Talk to the children in your care about age restrictions and why it’s important to follow them.

Areas of Concern

Potential Risks

3. In-App Nudges

What is it?

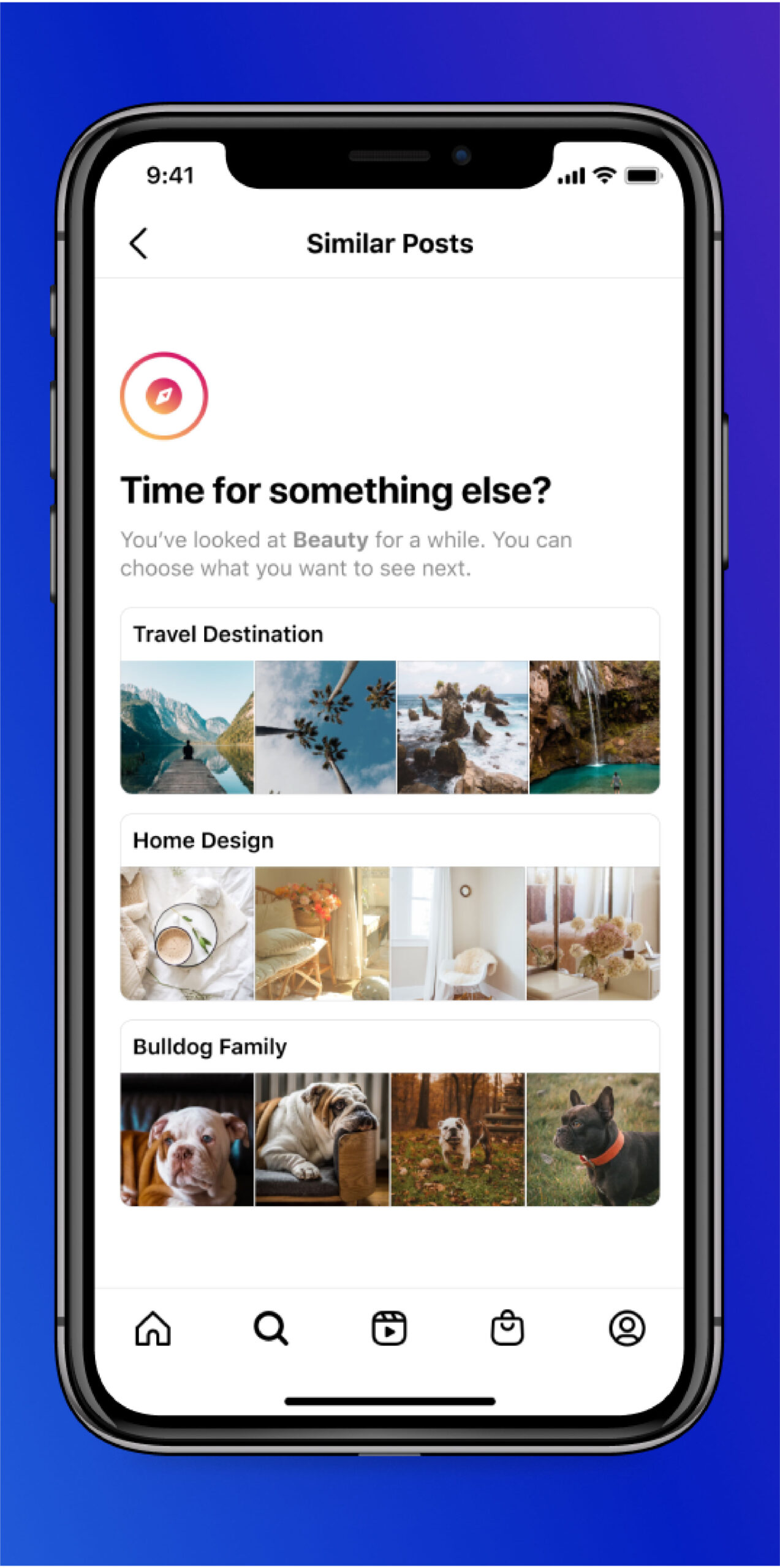

This feature is designed to encourage young people using the platform to ‘discover something new’ while excluding topics that may be associated with appearance comparison or fixation. The ‘nudge’ will use a new type of notification to interrupt users who are spending too much time browsing posts with themes that might make them anxious, upset, or self-conscious. They will then be shown a range of ‘positive’ options to choose from to ‘explore next’.

Areas of Concern

Potential Risks

4. ‘Take a break’

What is it?

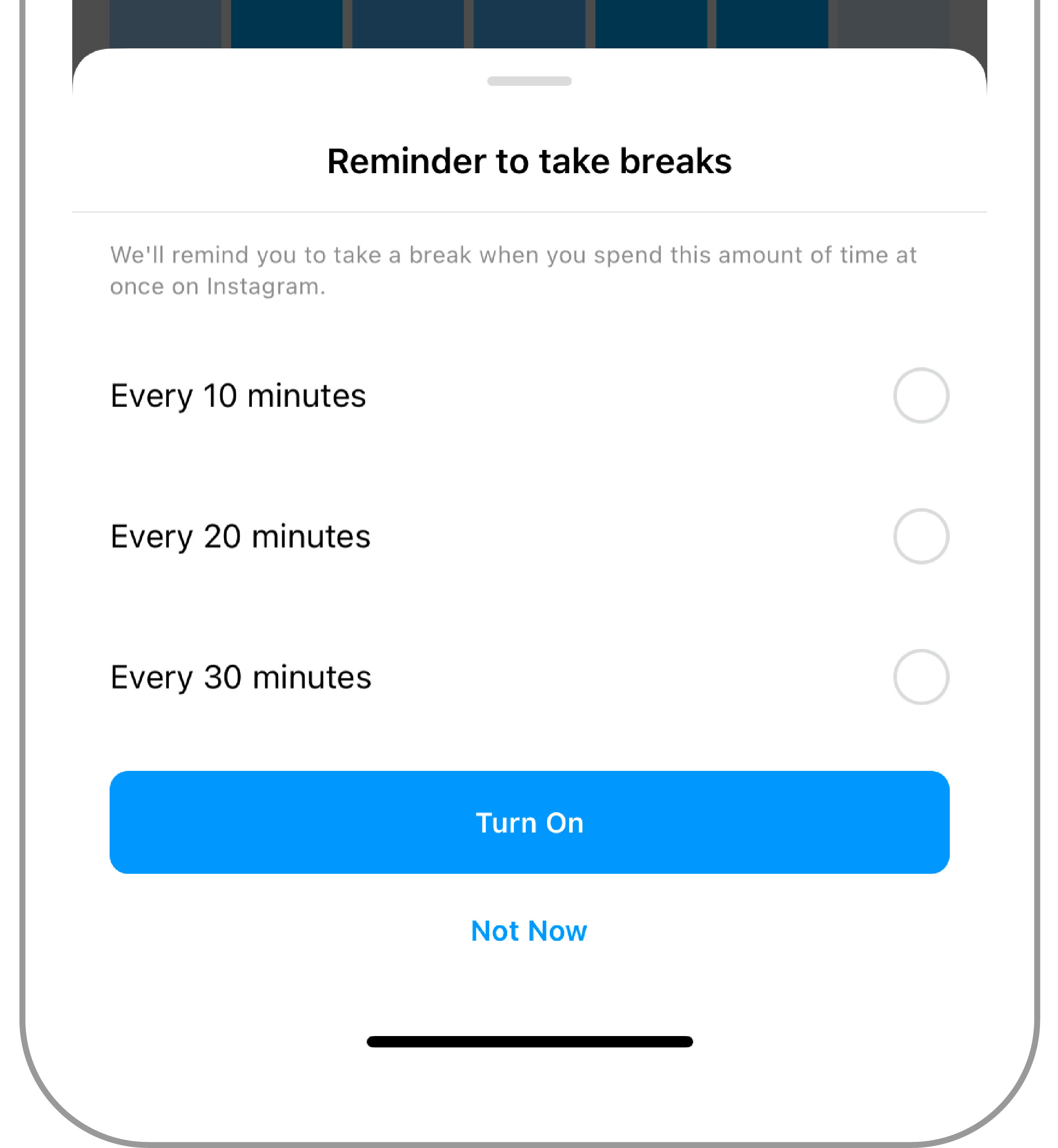

This updated feature lets users turn on a notification that interrupts their in-app activity with a ‘Time to take a break?’ message after a chosen period of 10, 20, or 30 minutes. A list of possible alternative activities (such as ‘Go for a walk’, ‘Listen to music’, or ‘Write down what you’re thinking’) may also appear. Initial testing showed that 90% of users kept this feature enabled.

‘Break’ reminders will also feature well-known Instagram creators, to raise screen-time awareness and encourage users to take a break from the online environment.

Areas of Concern

Potential Risks

5. Partnership with Yoti

What is it?

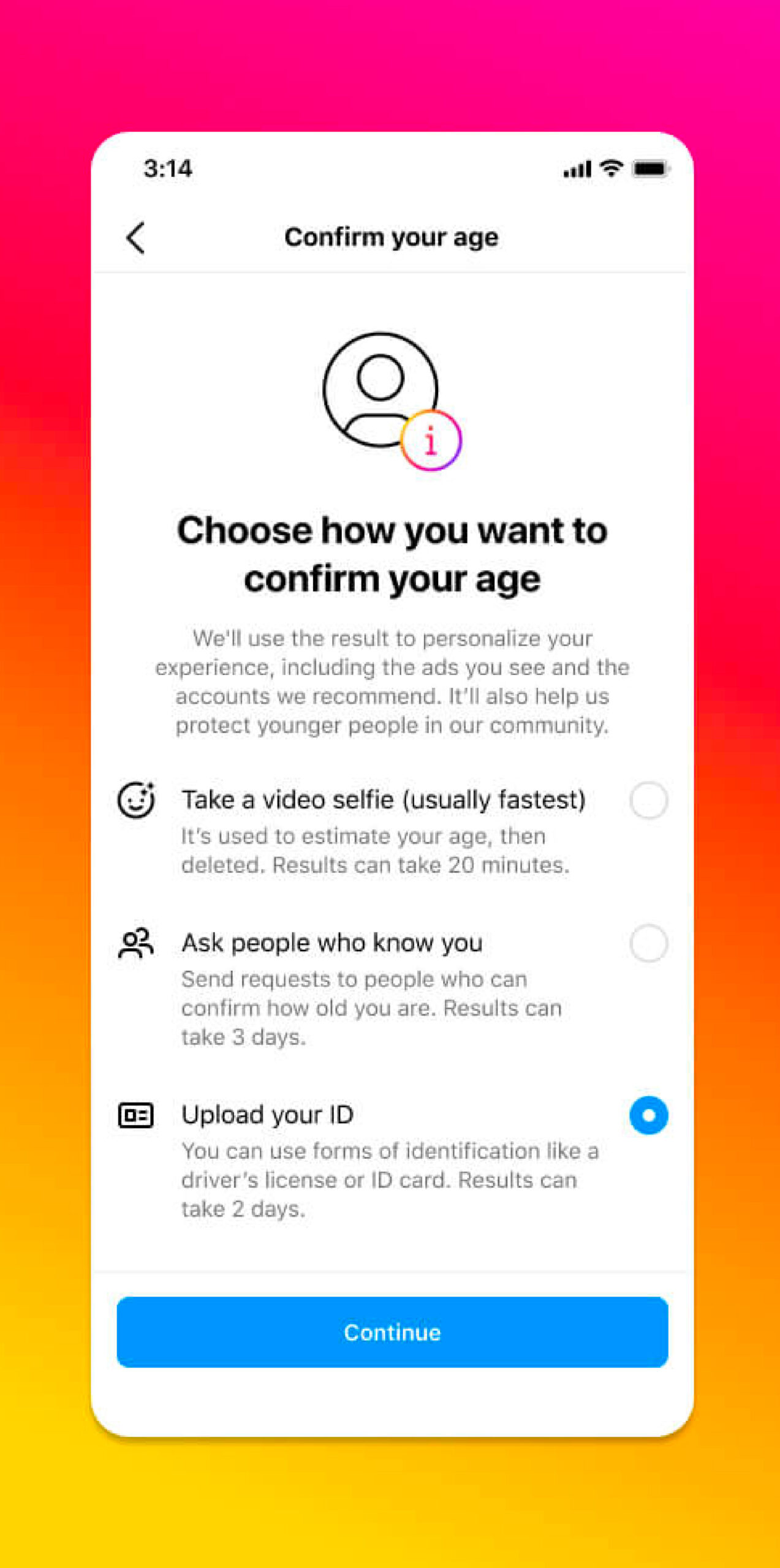

On 23rd June 2022, Instagram announced a partnership with Yoti, the age verification system approved by the Home Office. Instagram claims Yoti’s ‘privacy-preserving’ technology will give users options to verify their age on the platform, allowing it to offer more ‘age-appropriate experiences’. Yoti uses artificial intelligence to estimate a user’s age and verify their identity against a provided image, piece of ID, and/or short video selfie. This detects whether a user has given the wrong age or has used a photo taken from the internet. Yoti is currently used by platforms such as Yubo and is GDPR compliant.

This test is currently only available to selected users in the US. We will continue to monitor its progress and will update our information once it is released in the UK.
