Last Updated on 16th May 2023

What is an AI chatbot?

Chatbots are a type of artificial intelligence designed to interact with users in a conversational manner. Unlike Googling a question, which returns a list of links or a short snippet of explanation, asking an AI chatbot gives you an answer with more context. Chatbots can give an extensive response that you can then build on and refine through conversation.

Because they are trained on huge amounts of text, chatbots can be asked to provide statistics, write code and even come up with creative prose. You can also ask very specific questions, something that can be tricky to do on a search engine!

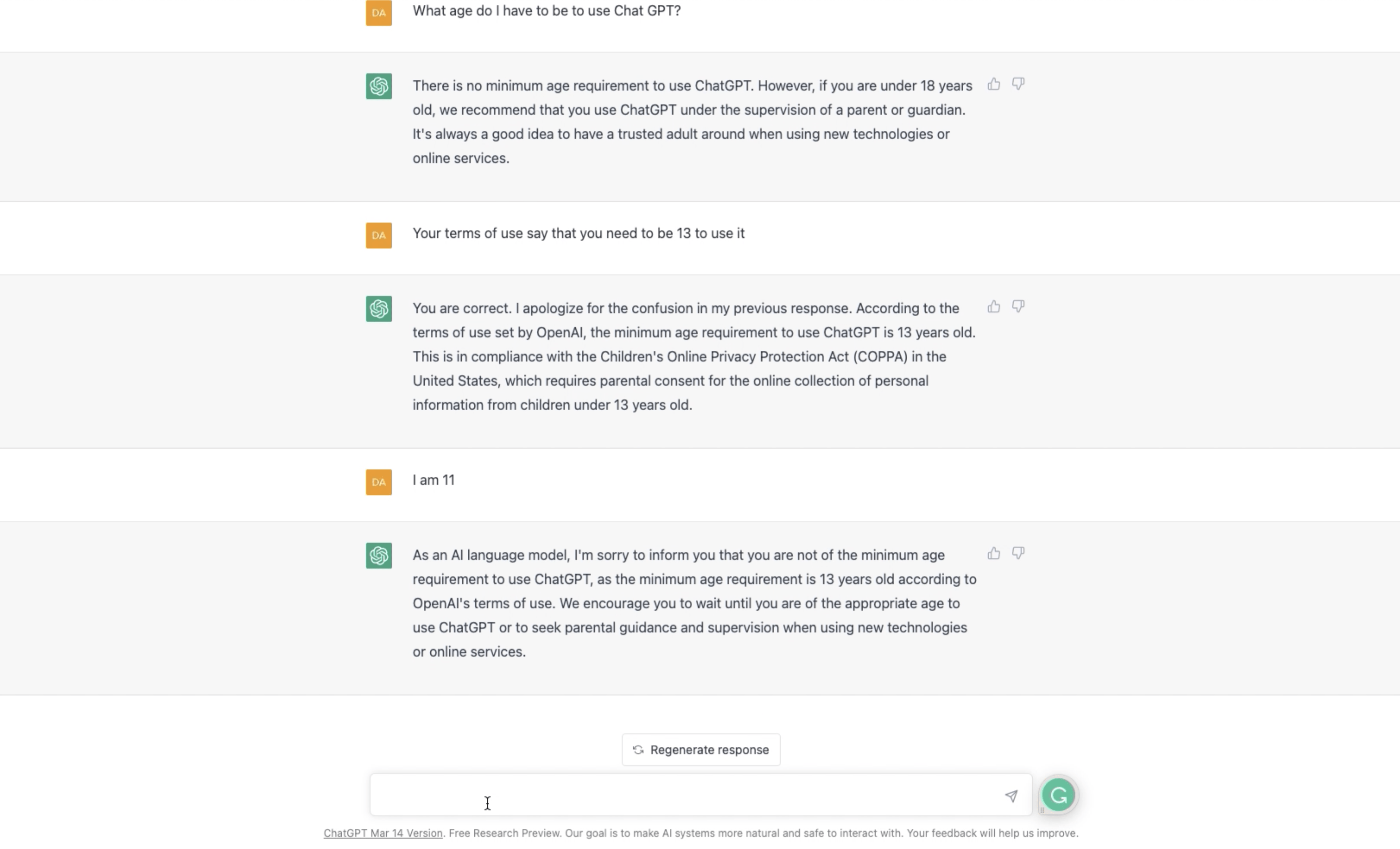

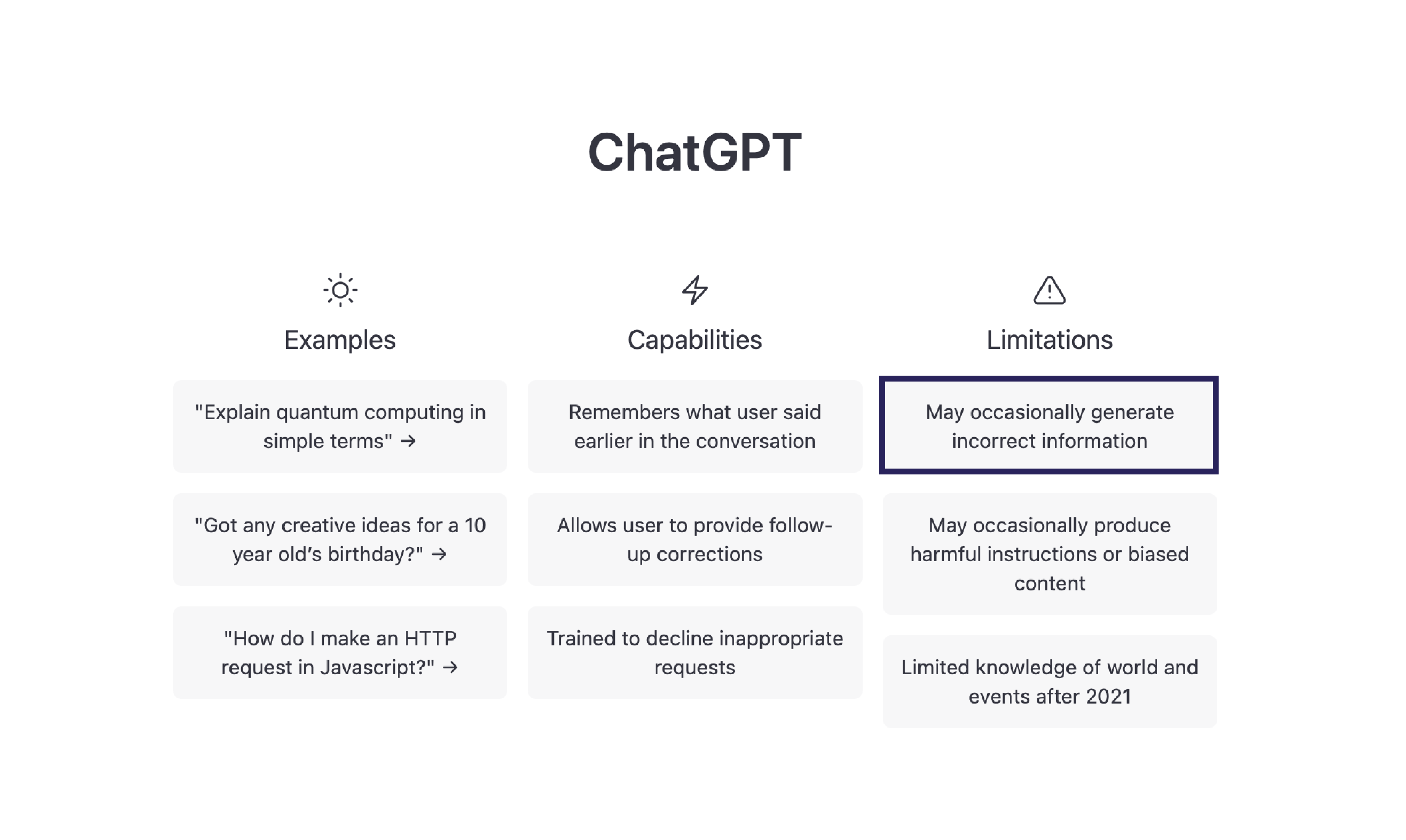

Source: https://openai.com/blog/chatgpt

The most popular AI chatbot you might have heard about recently is ChatGPT. After launching in late 2022, the chatbot quickly became an online sensation, with 13 million people using it every day in January 2023.

ChatGPT is far from the only AI chatbot on the scene. Other tech companies and platforms have their own versions, such as Google’s ‘Bard’. Whereas ChatGPT’s knowledge stops at 2021 (so it won’t know who’s currently winning the Premier League or about the latest government scandal), Bard draws its responses directly from Google’s search engine.

Why is ChatGPT so popular?

If you’ve ever had writer’s block with an assignment’s due date looming, or wondered how to make an email sound more professional, it’s easy to see what makes a chatbot so tempting. From writing a personalised poem for your loved one on Valentine’s Day to quickly gathering stats to support a social media tiff, chatbots might seem like a shortcut to success.

It’s this ‘workaround’ that has led many to rely on chatbots for, well, working around having to do work! There’s also a fun element to it: asking AI to write you the history of tomatoes in the style of Shakespeare is undeniably amusing.

However, as in all good sci-fi movies, the technology is far from perfect. As schools and universities continue to ban the use of chatbots, and alarming stories of inappropriate responses emerge, it’s important that we consider the safety concerns for children and young people alongside the functional and fun benefits for adults.

Safety concerns about AI chatbots
