Last Updated on 29th April 2022

While tech companies are constantly releasing new updates and features for mobile phones, laptops, and tablets, one of Apple’s upcoming releases is getting a lot of attention from safeguarding professionals as well as parents and carers.

We’ve taken a look at the ‘Communication Safety in Messages’ feature to help you know everything you need to know before it launches in the UK.

What is it?

This new safety feature is referred to as ‘Communication Safety in Messages’ – and pretty much does what it says on the tin. It will allow parents and carers to activate alerts and warnings on their children’s phones, specifically for images that contain nudity and are sent or received over the Messages app on iOS devices.

Why is it being introduced?

The aim is to help children and young people make better choices about receiving and sending sexually explicit images. It also allows parents and carers to play a more active role in the online interactions of the children and young people in their care, while helping to combat the spread of self-generated images and Child Sexual Abuse Material (CSAM).

Though originally announced in summer 2021 as part of a range of updates, Communication Safety in Messages is only being released this year after facing controversy over its design. Under the original design, parents and carers would have been automatically alerted if a child under 13 sent or received sexually explicit images, but there were concerns that this would compromise user privacy and put LGBTQ+ children at risk of being outed.

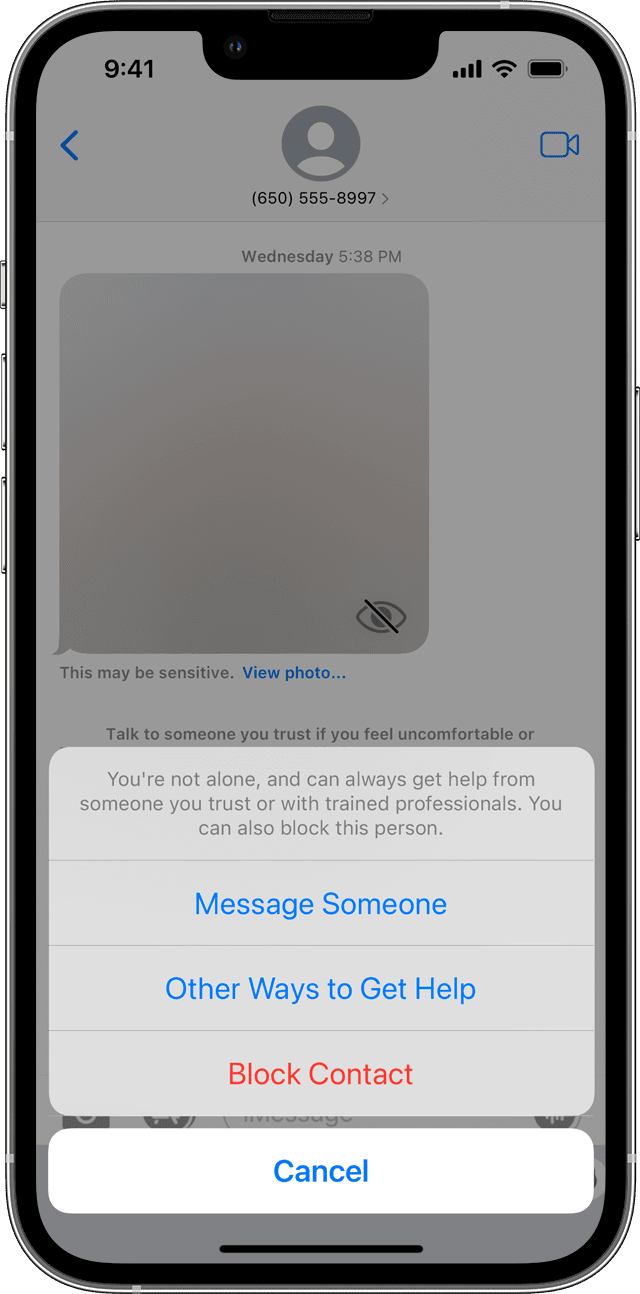

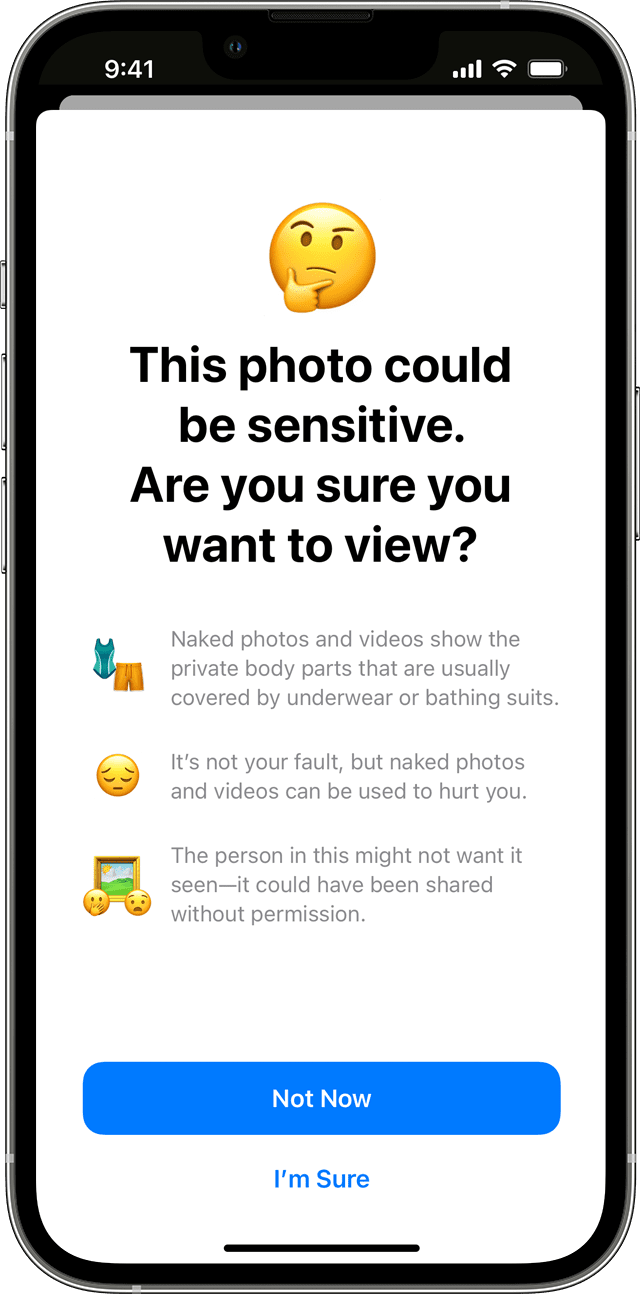

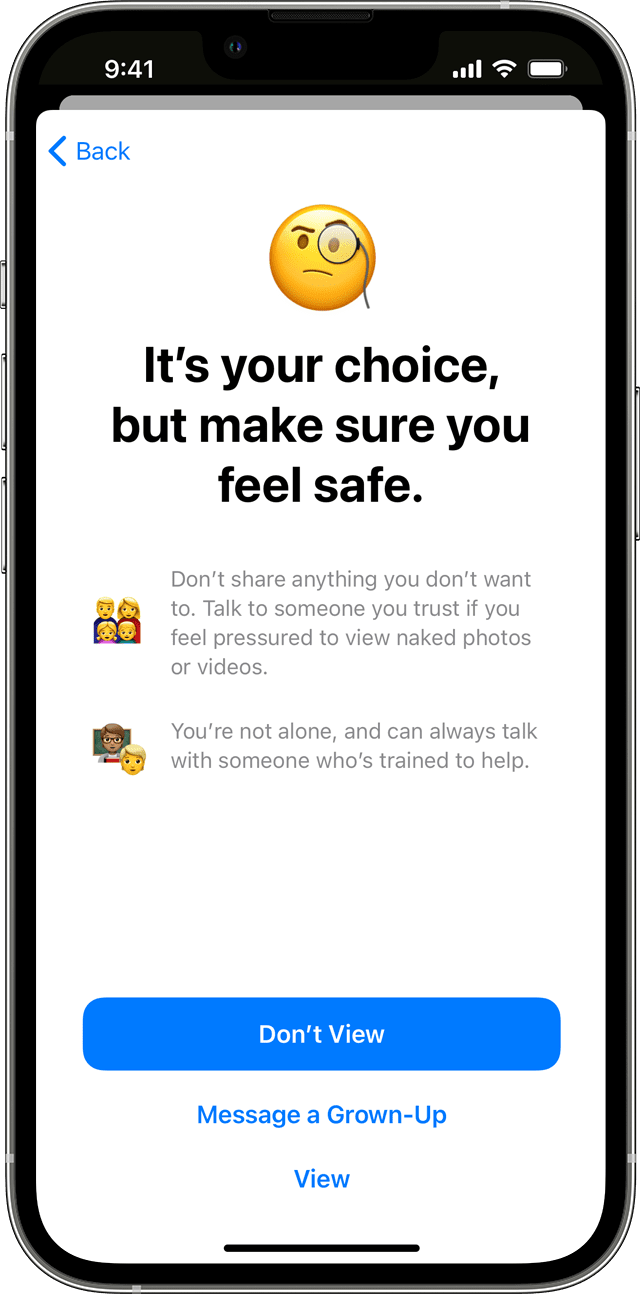

Apple have removed the automatic alert and instead created an intervention system that lets the child choose not to open the image and reach out for help if they need it. It is hoped this will help reduce the number of self-generated images and CSAM being sent and received by children and young people. They are also releasing a safer search function within Spotlight, Siri, and Safari that signposts users to help if they search for topics relating to child sexual abuse.

It is unclear exactly when or how these updates will appear in the UK, and which help services UK users would be directed to.

“Self-generated sexual imagery of children aged 7-10 years old has increased three-fold making it the fastest growing age group. In 2020 there were 8,000 instances. In 2021 there were 27,000 – a 235% increase. [Meanwhile] self-generated content of children aged 11-13 remains the biggest age group for this kind of material. In 2021, 147,900 reports contained self-generated material involving children aged between 11 and 13. This is a 167% increase [from 2020].”

It’s important to note that Apple will not have access to these images. All processing is done by on-device machine learning, and messages retain end-to-end encryption. This feature is due to be rolled out soon in the UK (as well as Canada, New Zealand, and Australia), and has already been released in the US.

How does it work?

This will primarily work by using on-device machine learning (technology built into the device that can detect certain objects or content in images without human involvement) to scan photos and attachments sent over Messages. The feature works in two different ways, depending on whether the child or young person in your care is the sender or the receiver.

If an explicit photo is received by a child…

If a child attempts to send an explicit photo…

This is an opt-in feature, which means parents and carers will have to enable it through Family Sharing for it to be active on their family’s devices. Children and young people under the age of 18 must also have an Apple ID that is connected to the family group and set up with their correct date of birth for this feature to work properly.

Is this the same as the CSAM (Child Sexual Abuse Material) detection feature?

The ‘Communication Safety’ feature was first announced alongside another update aimed at tackling the growing amount of child sexual abuse material online. The CSAM detection feature would have checked photos uploaded to iCloud Photos against known CSAM images and reported matches to the appropriate services (the National Center for Missing and Exploited Children in the US). This feature faced a significant backlash over concerns for user privacy, and Apple has yet to provide an update on its release.

Are there any risks?
