Last Updated on 18th December 2025

Safeguarding Students

A Guide for Schools on Preventing and Responding to AI-Generated Image Exploitation

November 18, 2025

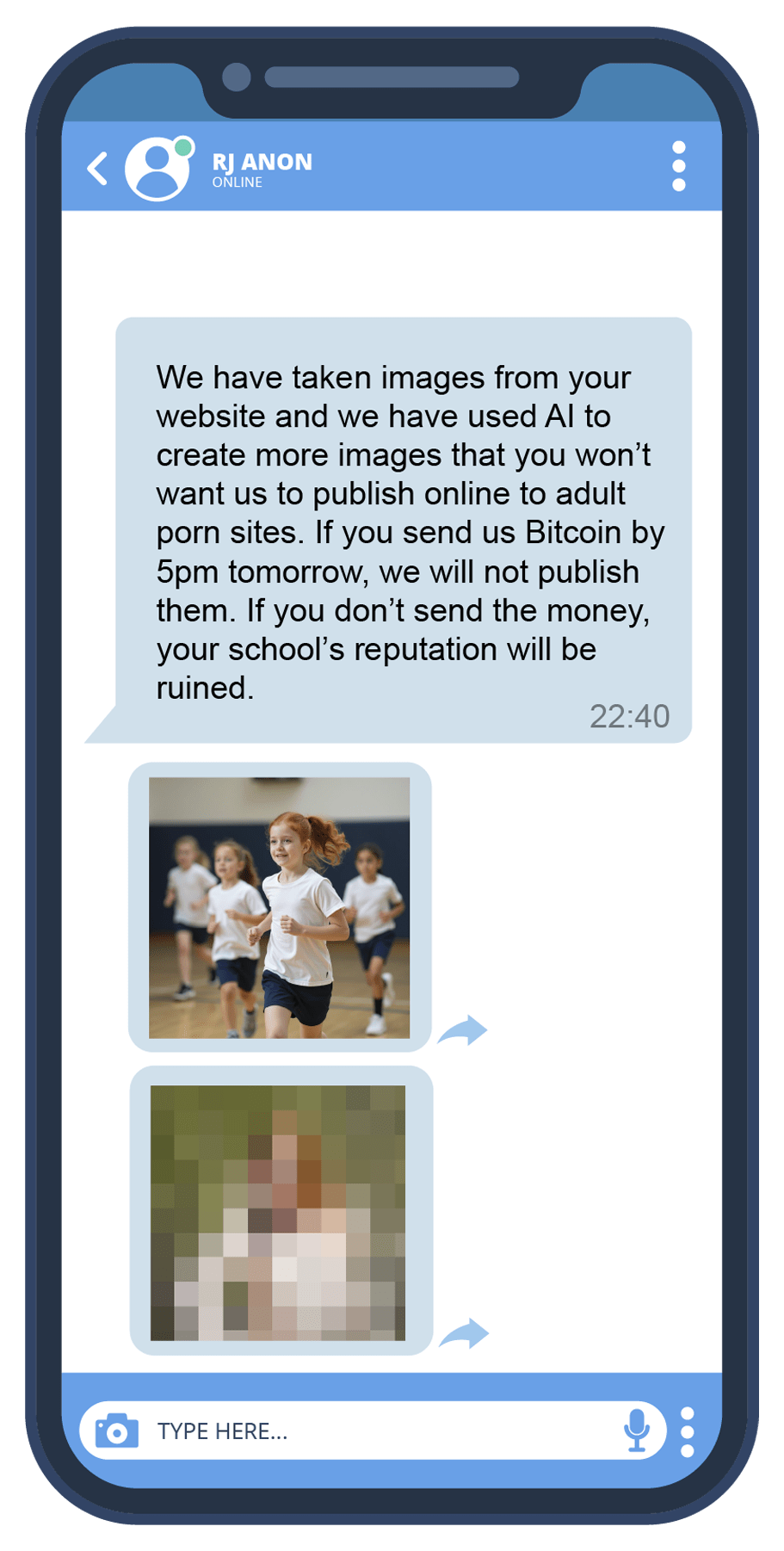

A small number of schools are reporting incidents in which photos, often of girls, are copied from school websites and social media channels. Scammers then use Artificial Intelligence (AI) to sexualise the images in an attempt to blackmail schools, targeting both students and staff.

The increasing prevalence and accessibility of AI image generation tools are a worrying development, as they make these scams more likely to occur. This guide provides schools with information on how to prepare for, prevent, respond to, and report incidents of AI-generated image exploitation.

*These images are AI-generated and do not depict real children.

Preparing for and Preventing Online Extortion

It’s easy to think, ‘it won’t happen here’, but staying ahead of online risks is key to minimising harm. By proactively educating your school community, you empower them to recognise threats and respond appropriately. You can do this by:

If Your School is Targeted in an Online Extortion Scam

The threat of student images being misused can be distressing. Stay calm and take the following steps.

1. Report and Respond

Capture the Evidence Carefully

Only screenshot, save or otherwise capture evidence of online abuse or scams if you are sure the material contains no indecent images of children. Sharing or forwarding the images, even within the school, can cause further harm. Whilst there are limited defences for possessing this type of imagery (including coming across it in the course of your employment), these apply only to particular roles and conditions. If you find an image, do not share it or show it to others. Secure the device(s), inform your Safeguarding Lead and call the police.

2. Support

Consider who has been targeted based on the images. If they are sexually explicit, do not view them again. Instead, seek police assistance in identifying those affected and ensure they receive the necessary support.

Supporting the Victim(s)

Offer immediate support to those involved and reassure them that it is not their fault. You might see a range of reactions, from laughter to fear about how this will affect them in the future. It may be time to consider further options for support, such as pastoral care, counselling or similar professional services.

Communication with Parents

Consider consulting your school’s legal team. Creating template letters may help to ensure a consistent tone and appropriate messaging for specific groups, for example parents/carers of children in the images, other parents/carers and the wider school community, including staff and teachers.

How to detect AI-generated imagery

REPORT

Further Resources

This article was originally published on 18th February 2025

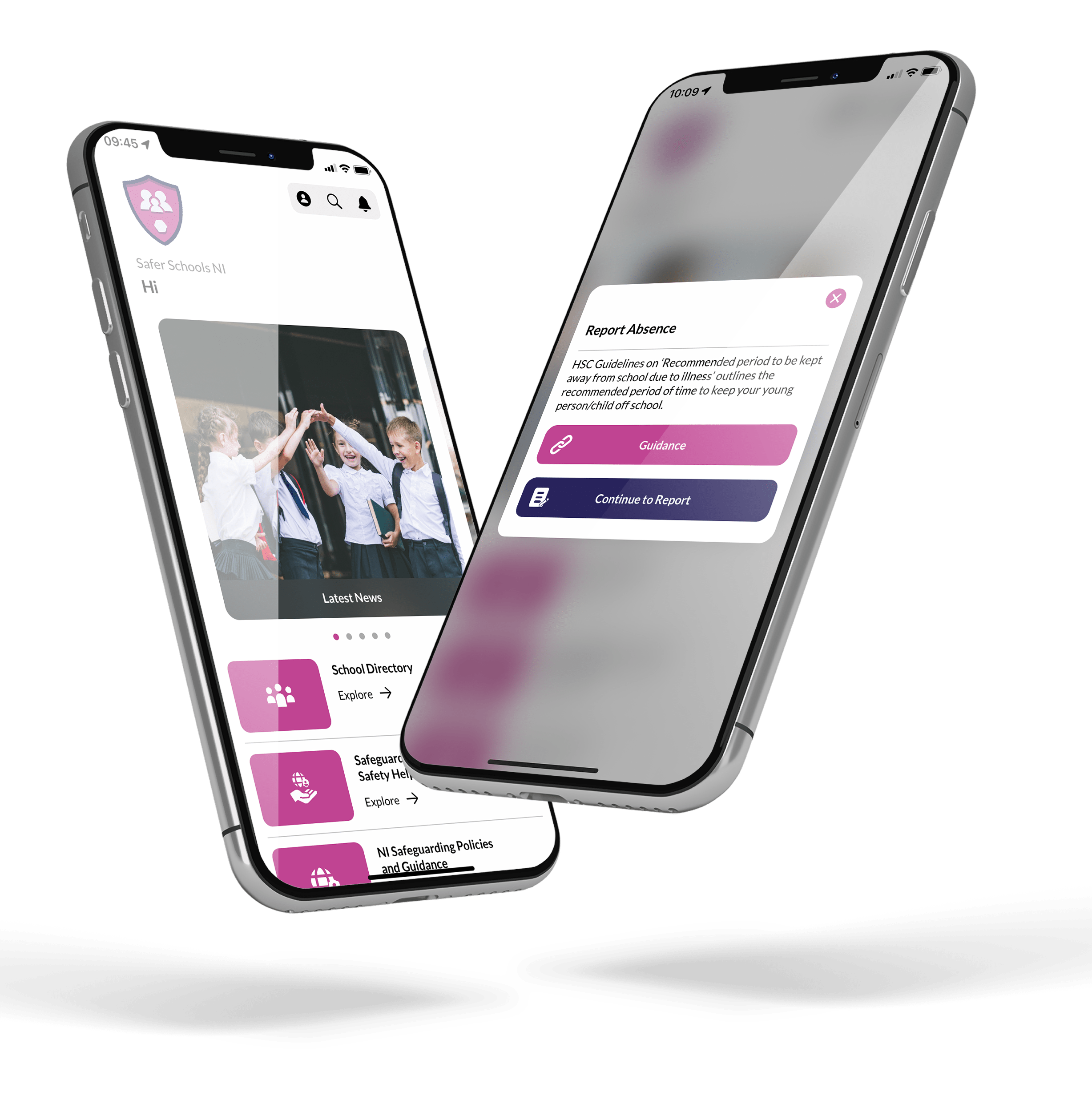

What Is the Safer Schools NI App?

In an ever-evolving online world, keeping up-to-date with the latest news, trends and risks can be difficult. Finding credible and relevant resources can be even harder. With the Safer Schools NI App, your school community will be kept informed on the fast-paced changes of the digital landscape, designed to be a one-stop-shop for accessing essential safeguarding information, advice, and guidance.

What Is the Teach Hub?

The Safer Schools library of resources created by teachers for teachers.

Our team have produced a range of high-quality lesson plans, PowerPoints, videos and worksheets for you to use in your classroom. Covering topics such as catfishing, influencers and gaming, these will be your go-to when it comes to addressing the big issues facing today’s children and young people.
