Last Updated on 19th November 2021

What Is It?

The Age Appropriate Design Code (sometimes referred to as the Children’s Code) is a new code of practice that sets out standards of age-appropriate design for “information society services” that are likely to be accessed by children. In other words, it introduces new requirements for any company that offers online services likely to be accessed by children.

The Code itself contains 15 standards that in-scope online services must meet, covering areas such as privacy, transparency, and data sharing. You can find the full list of standards below. It came into force in September 2020, but companies were given a 12-month transition period to comply, which ended on September 2nd, 2021.

Why Has the Code Been Created?

From playing games on their parents’ phones and watching cartoons on YouTube to getting their own devices and joining social media, children and young people use the online world every day. However, the internet wasn’t created with safeguarding children in mind, and neither were the rules and regulations that online companies previously had to follow. This matters most when it comes to how children’s personal data is collected and used.

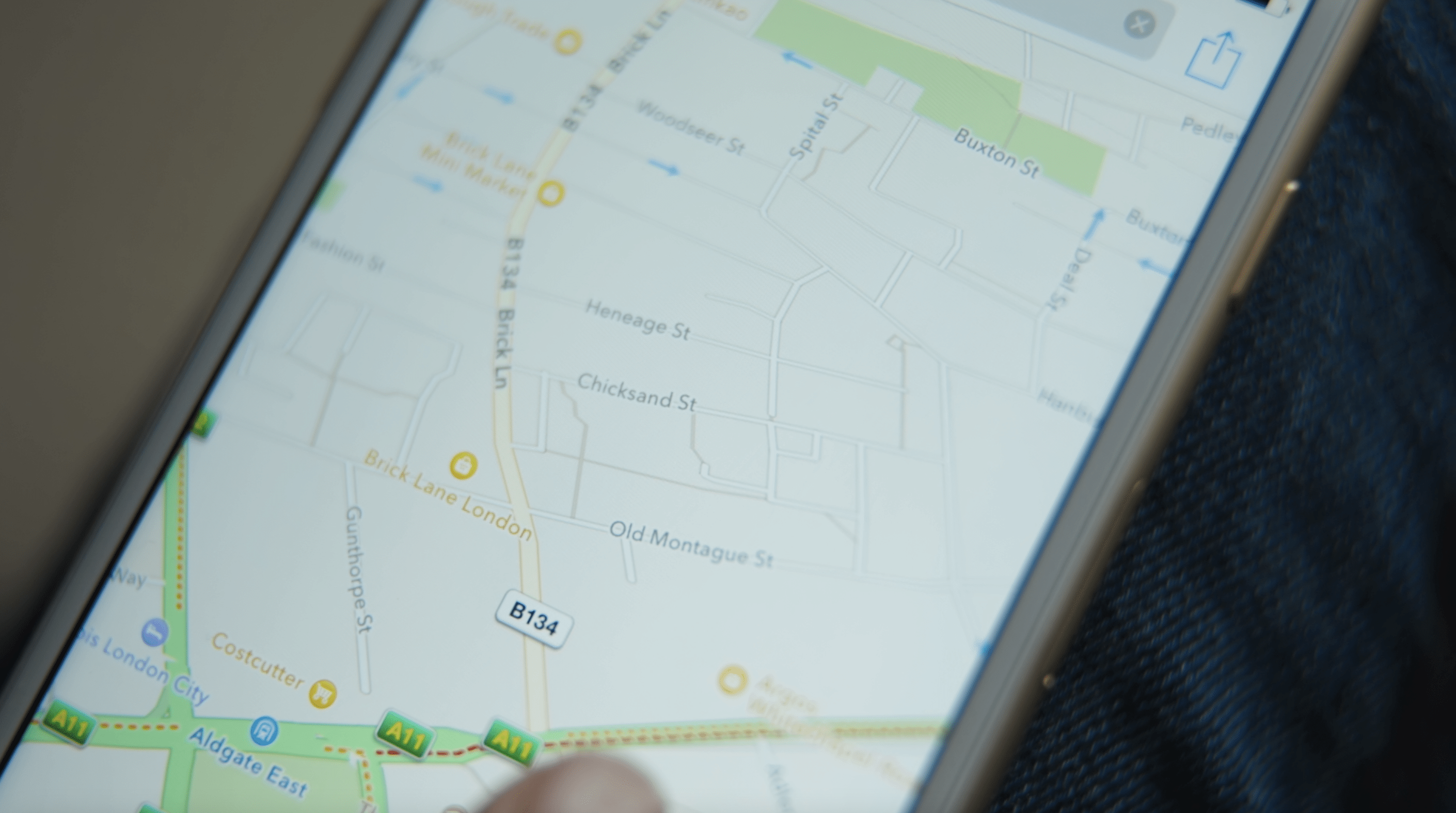

As adults, we hopefully have a better understanding of what we’re agreeing to online. For example, we are more likely to understand what we’re signing up to when we accept data usage pop-ups, whilst children might not. Similarly, when we allow an app to use geolocation, we understand the risks of sharing our location, whereas children may just see the novelty of sharing this information.

These new standards are about making the digital space where children learn, play and socialise a safer place to be.

The code is grounded in the United Nations Convention on the Rights of the Child (UNCRC), which recognises that children need special safeguards in all aspects of their lives. The GDPR likewise recognises that children’s personal data merits specific protection. This code is the first of its kind and has the potential to influence data protection policies in other countries.

Platforms that don’t comply could face serious consequences. The ICO has enforcement powers under the GDPR and other relevant laws, and companies that breach the Age Appropriate Design Code can be served with warnings, notices, and fines.

Who Created It?

The Code comes from the United Kingdom’s Information Commissioner’s Office (ICO), the UK’s independent body that upholds information rights. They are responsible for regulating and enforcing legislation such as the Data Protection Act, the GDPR, and the Freedom of Information Act. The new code falls within their remit because it concerns information rights, data protection, and the privacy of electronic communications.

Who Does It Apply To?

The code applies to companies whose services count as information society services. Simply put, this is any business that provides a service online in exchange for money. The user doesn’t have to pay the company directly: the company could be earning money through advertising and/or data.

This includes:

It’s not just UK-based companies that are affected; even non-UK companies will have to comply if they process the personal data of UK children.

This means apps and websites such as YouTube, Facebook, and Google all have to adhere to the new standards. Even if a service isn’t aimed at children, the code must be implemented if the service is likely to be accessed by children under 18.

What Changes Will I See?

There have been changes across thousands of apps and websites over the last 12 months, some more noticeable than others. These changes have included:

Further Resources
